How to Create LRU Cache in Python

What is an LRU Cache?

For any software product, application performance is one of the key quality attributes. One common technique used for improving performance of a software application is to use memory cache. A memory cache puts frequently used application data in the fast RAM of the computing device. Reading or writing data to an in memory cache is usually much much faster than reading/writing data from a database or a file. However, there is a limit to the amount of data that you can cache in memory since the system RAM is a limited and expensive resource.

So in practical applications, you set a limit to cache size and then you try to optimise the cache for all your data requirements. One approach used for balancing cache size is the LRU cache. In an LRU(Least Recently Used) cache, whenever the cache runs out of space, program will replace the least recently used item in cache with the data you want to cache. In an LRU cache, the algorithm keeps track of all cache items and how recently each one was used relative to each other.

Any generic cache implementation has two main operations,

- get(key) - Get a cache entry given its unique identifier key.

- put(key,value) - Insert a cache entry with its unique identifier.

In an LRU cache, the put() and get() will have basic internal implementation to manage how recently the cache entries was accessed. In put() operation, LRU cache will check the size of the cache and it will invalidate the LRU cache entry and replace it with the new one if the cache is running out of space.

If you are using Python 3, you can either build your own LRU cache using basic data structures or you can use the built-in LRU cache implementation available in functools.lru_cache(). In this article, I will start with the basic data structure solution since it enables you to understand the LRU concept better.

How to Create an LRU Cache in Python?

Let us now create a simple LRU cache implementation using Python. It is relatively easy and concise due to the features of Python. The following program is tested on Python 3.6 and above.

Python provides an ordered hash table called OrderedDict which retains the order of the insertion of the keys. Hence this order can be used to indicate which entries are the most recently used. Here is the strategy followed in the python program given below,

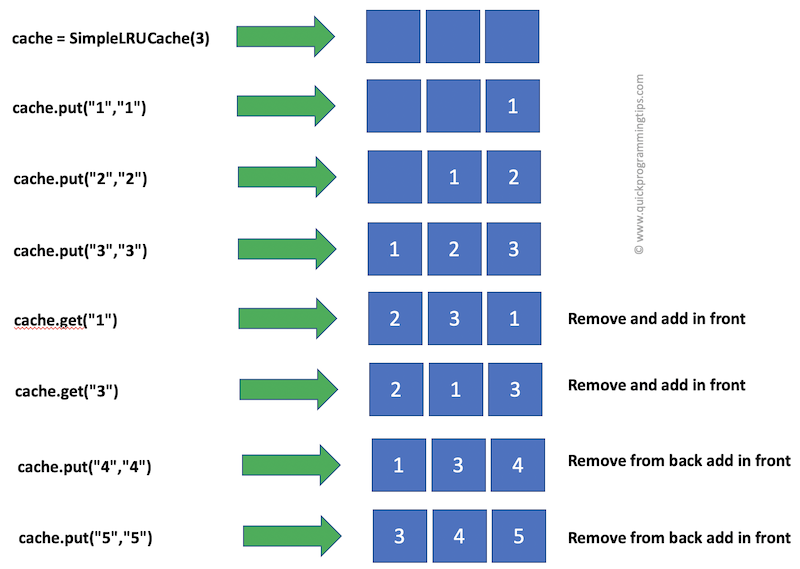

- Whenever get() is invoked, the item is removed from dictionary and then added at the end of the ordered keys. This ensures that recently used items are always at the end of the dictionary.

- Whenever put() is invoked, if we run out of space, the first entry in ordered keys is replaced with the latest entry. This works because every get() is moving items to the end of the ordered keys and hence first item is the least recently used item!

import collections

class SimpleLRUCache:

def __init__(self, size):

self.size = size

self.lru_cache = collections.OrderedDict()

def get(self, key):

try:

value = self.lru_cache.pop(key)

self.lru_cache[key] = value

return value

except KeyError:

return -1

def put(self, key, value):

try:

self.lru_cache.pop(key)

except KeyError:

if len(self.lru_cache) >= self.size:

self.lru_cache.popitem(last=False)

self.lru_cache[key] = value

def show_entries(self):

print(self.lru_cache)

# Create an LRU Cache with a size of 3

cache = SimpleLRUCache(3)

cache.put("1","1")

cache.put("2","2")

cache.put("3","3")

cache.get("1")

cache.get("3")

cache.put("4","4") # This will replace 2

cache.show_entries() # shows 1,3,4

cache.put("5","5") # This will replace 1

cache.show_entries() # shows 3,4,5

The following diagram shows how the LRU cache works in the above implementation.

How to Create an LRU Cache in Python Using functools?

Since LRU cache is a common application need, Python from version 3.2 onwards provides a built-in LRU cache decorator as part of the functools module. This decorator can be applied to any function which takes a potential key as an input and returns the corresponding data object. When the function is called again, the decorator will not execute function statements if the data corresponding to the key already exists in the cache!

from functools import lru_cache

@lru_cache(maxsize=3)

def get_data(key):

print("Cache miss with "+key)

# A costly I/O usually implemented below

return key + ":value"

print(get_data("1"))

print(get_data("2"))

print(get_data("3"))

print(get_data("4"))

print(get_data("4"))

print(get_data("3"))

print(get_data("1"))